Who's afraid of the big bad AI?

AI geopolitics, Clubhouse & Signal in Austria, Bitcoin's footprint

🧠 Thoughts & Readings

Every AI system has, at some point, been developed by a human. And every human, even an evil dictator, has an incentive not to hand over total control to an AI agent. Even so, future AI systems could influence our daily lives in ways their creators never foresaw, potentially without us even noticing [1].

Formalizing the Argument of Ignored Attributes in a Utility Function - Consequences of Misaligned AI

One intuition for why powerful AI systems might lead to bad consequences goes as follows:

1) Humans care about many attributes of the world and we would likely forget some of these when trying to list them all.

2) Improvements along these attributes usually require resources (e.g. money), and gaining additional resources often requires sacrifices along some attributes.

3) Because of 1), naively deployed AI systems would only optimize some of the attributes we care about, and because of 2) this would lead to bad outcomes along the other attributes.
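To make the three-step argument above concrete, here is a toy sketch. Everything in it is a hypothetical assumption, not taken from the linked post: a fixed budget of 10 resource units is split across three attributes `a`, `b` and `c`, each attribute's value is the square root of the resources it receives (diminishing returns), and the objective handed to the optimizer simply forgets attribute `c`.

```python
import math

BUDGET = 10  # hypothetical total resources (e.g. money), in arbitrary units

def allocations(budget):
    """All ways to split an integer budget across three attributes (a, b, c)."""
    for a in range(budget + 1):
        for b in range(budget - a + 1):
            yield (a, b, budget - a - b)

def true_utility(alloc):
    # What we actually care about: all three attributes, with diminishing returns.
    return sum(math.sqrt(x) for x in alloc)

def proxy_utility(alloc):
    # The objective we actually wrote down: attribute c was forgotten (premise 1).
    a, b, _c = alloc
    return math.sqrt(a) + math.sqrt(b)

# The "naively deployed AI system" maximizes the proxy over every possible split.
proxy_best = max(allocations(BUDGET), key=proxy_utility)
# For comparison: the split we would have chosen with the full utility function.
true_best = max(allocations(BUDGET), key=true_utility)

print("optimizer's choice:", proxy_best, "true utility:", round(true_utility(proxy_best), 3))
print("balanced choice:   ", true_best, "true utility:", round(true_utility(true_best), 3))
```

The optimizer puts nothing into the forgotten attribute `c`, because every unit spent there is a unit taken away from what it is scored on (premise 2), and it ends up with a lower true utility than a balanced split. The square-root returns are just one convenient choice; the point is only that whatever is missing from the objective gets traded away for resources.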

A new breakthrough in AI (think of DALL·E’s avocado chairs) easily gets people excited. An improvement in the explainability and fairness of an algorithm, much less so. At the global level, too, the incentive structure in AI research works against the development of trustworthy agents.

If we assume there are only two countries conducting cutting-edge AI research (think China vs. U.S.) and take into consideration the power advantage of being the first to develop a super high-performing model, then each country will always have an incentive to opt for speed and compromise on ethics. You basically end up with a prisoner’s dilemma, or security dilemma [2].
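The dilemma can be sketched as a two-player game. The payoff numbers below are hypothetical and purely ordinal (a country most prefers racing ahead while its rival is cautious, then mutual caution, then a mutual race, and least of all being the cautious one who loses the race); they are not estimates from [2] or anywhere else. The code computes each country's best response and finds the Nash equilibrium by brute force.

```python
# Hypothetical ordinal payoffs (higher = better) for a two-country AI race.
# Keys are (country A's choice, country B's choice); values are (A's payoff, B's payoff).
# The numbers are illustrative only: just their ordering matters.
STRATEGIES = ("cautious", "fast")

PAYOFFS = {
    ("cautious", "cautious"): (3, 3),  # both invest in safety, neither gains an edge
    ("cautious", "fast"):     (1, 4),  # B gets the first super high-performing model
    ("fast", "cautious"):     (4, 1),  # A gets the first super high-performing model
    ("fast", "fast"):         (2, 2),  # both race and both compromise on safety
}

def best_response(player, other_choice):
    """Strategy that maximizes `player`'s payoff (0 = A, 1 = B) given the rival's choice."""
    def payoff(own):
        profile = (own, other_choice) if player == 0 else (other_choice, own)
        return PAYOFFS[profile][player]
    return max(STRATEGIES, key=payoff)

def nash_equilibria():
    """Profiles where each country is already playing a best response to the other."""
    return [
        (s0, s1)
        for s0 in STRATEGIES
        for s1 in STRATEGIES
        if best_response(0, s1) == s0 and best_response(1, s0) == s1
    ]

for other in STRATEGIES:
    print(f"if the rival goes {other!r}, the best response is {best_response(0, other)!r}")
print("equilibrium:", nash_equilibria())
print("payoffs there:", PAYOFFS[nash_equilibria()[0]],
      "vs. mutual caution:", PAYOFFS[("cautious", "cautious")])
```

Whatever the rival does, going fast pays more, so both countries end up at ("fast", "fast") with a payoff of 2 each, even though mutual caution would have given both of them 3. That gap between the individually rational choice and the collectively better outcome is exactly what makes it a prisoner's dilemma.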

The strategic and security implications of AI

And then of course that incentivizes the other countries to develop in a similar way. So that’s sort of the way that I think about what sometimes gets called a race to the bottom on safety is, how are kind of countries that are really trying to be sort of thoughtful and rational about how they’re developing these technologies, but they’re also really valuing their own defense and their own security. Are they going to feel able to be cautious and to take the time to build in safety and robustness and reliability and interpretability into their systems, or are they going to feel the need to go faster?

Preliminary Conclusions:

The future of AI (and its ethical standards) is closely intertwined with geopolitics

While topics such as AI alignment, explainability & design are getting more and more attention, there are still heaps of things about the effects of deploying autonomous agents at large scale that humanity does not have a clue about

AI regulation is a bit like climate agreements: everybody wants the future to be safe, but nobody wants to restrict their own behavior. We need new ideas and good negotiators to develop standards that are acceptable to countries worldwide.

And while there’s no such thing as a perfectly safe technology, there’s a world of difference between “I didn’t mean to” and “I actively tried to avoid”. [3]

Regulatory markets seem to be an interesting starting point. [4]

🤓 Learnings

Currently learning about: Personal Finance & Sustainable Investing 💸

Bitcoin uses more electricity per year than the whole country of Jordan [5]

While there is no scientific consensus yet on how big the climate impact of cryptocurrencies is (research ranges from ‘Bitcoin alone can push global warming above 2 degrees C until 2033’ to ‘Bitcoin's climate change impact may be much smaller than we thought’), we do know for certain that it has a large negative impact on our environment. Does a crypto investment turn you into a hypocrite if you simultaneously say you care about the environment? [6]

✨ Random

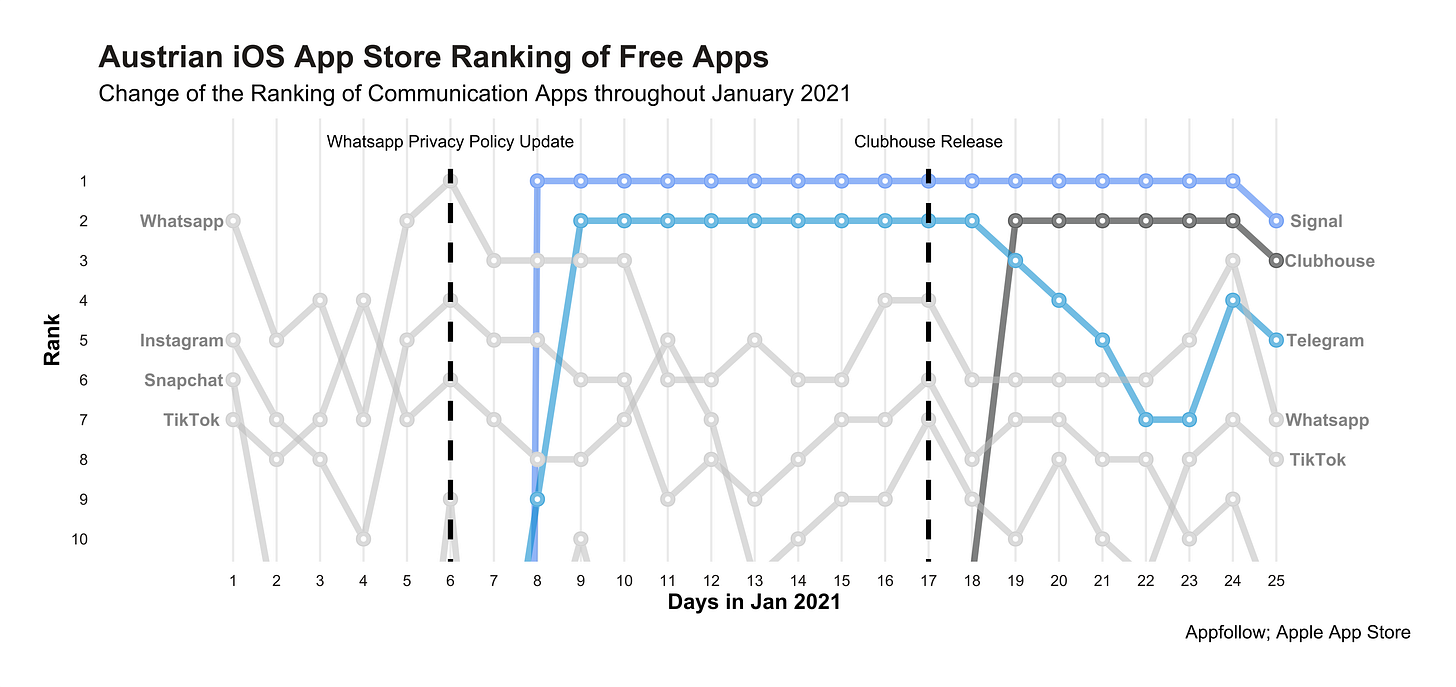

Dug out some statistics on last month’s rankings in Austria’s Apple App Store. Looks like WhatsApp has been disrupted?

[1] More on AI dystopias: Agency Failure AI Apocalypse?, What Failure Looks Like

[2] Prisoner’s Dilemma explained.

[3] When are we going to start designing AI with purpose?

[4] Regulatory Markets and International Standards as a Means of Ensuring Beneficial AI